Using StringView / German Style Strings to make Queries Faster: Part 2 - String Operations

Posted on: Fri 13 September 2024 by Xiangpeng Hao, Andrew Lamb

Editor's Note: This blog series was first published on the InfluxData blog. Thanks to InfluxData for sponsoring this work as Xiangpeng Hao's summer intern project.

In the first post, we discussed the nuances required to accelerate Parquet loading using StringViewArray by reusing buffers and reducing copies. In this second part of the post, we describe the rest of the journey: implementing additional efficient operations for real query processing.

Faster String Operations

Faster comparison

String comparison is ubiquitous; it is the core of the cmp, min/max, and like/ilike kernels. StringViewArray is designed to accelerate such comparisons using the inlined prefix. The key observation is that, in many cases, only the first few bytes of the string determine the string comparison results.

For example, to compare the strings InfluxDB with Apache DataFusion, we only need to look at the first byte to determine the string ordering or equality. In this case, since A is earlier in the alphabet than I, Apache DataFusion sorts first, and we know the strings are not equal. Despite only needing the first byte, comparing these strings when stored as a StringArray requires two memory accesses: 1) load the string offset and 2) use the offset to locate the string bytes. For low-level operations such as cmp that are invoked millions of times in the very hot paths of queries, avoiding this extra memory access can make a measurable difference in query performance.

For StringViewArray, typically only one memory access is needed: loading the view struct. The second memory access is required only if the result cannot be determined from the prefix. For the example above, there is no need for the second access. This technique is very effective in practice: the second access is never necessary for the more than 60% of real-world strings which are shorter than 12 bytes, as they are stored completely in the view.
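The prefix-first comparison can be sketched in plain Rust. This is a simplified model rather than the arrow-rs implementation: the `View` enum, `make_view`, `head`, and `compare` are hypothetical names, all long strings share a single buffer, and the fields are wider than the real 16-byte view. The second value returned by `compare` reports whether the data buffer had to be read.

```rust
use std::cmp::Ordering;

/// Simplified stand-in for a string view (hypothetical, not the arrow-rs type).
#[derive(Clone, Copy, Debug)]
enum View {
    /// Strings of at most 12 bytes are stored entirely inside the view.
    Inline { len: usize, data: [u8; 12] },
    /// Longer strings keep a 4-byte prefix and an offset into a data buffer.
    Ref { len: usize, prefix: [u8; 4], offset: usize },
}

/// Builds a view, appending the bytes to `buffer` only for long strings.
fn make_view(s: &str, buffer: &mut Vec<u8>) -> View {
    let b = s.as_bytes();
    if b.len() <= 12 {
        let mut data = [0u8; 12];
        data[..b.len()].copy_from_slice(b);
        View::Inline { len: b.len(), data }
    } else {
        let mut prefix = [0u8; 4];
        prefix.copy_from_slice(&b[..4]);
        let offset = buffer.len();
        buffer.extend_from_slice(b);
        View::Ref { len: b.len(), prefix, offset }
    }
}

/// First four bytes of the string, zero padded for very short strings.
fn head(v: &View) -> [u8; 4] {
    match v {
        View::Inline { data, .. } => [data[0], data[1], data[2], data[3]],
        View::Ref { prefix, .. } => *prefix,
    }
}

/// Full string bytes; for `Ref` views this is the second memory access.
fn bytes<'a>(v: &'a View, buffer: &'a [u8]) -> &'a [u8] {
    match v {
        View::Inline { len, data } => &data[..*len],
        View::Ref { len, offset, .. } => &buffer[*offset..*offset + *len],
    }
}

/// Compares two views; the bool reports whether the buffer was read.
fn compare(a: &View, b: &View, buffer: &[u8]) -> (Ordering, bool) {
    let (ha, hb) = (head(a), head(b));
    if ha != hb {
        // Decided from the views alone, no buffer access.
        return (ha.cmp(&hb), false);
    }
    let touched = matches!(a, View::Ref { .. }) || matches!(b, View::Ref { .. });
    (bytes(a, buffer).cmp(bytes(b, buffer)), touched)
}
```

With this model, comparing the views for InfluxDB and Apache DataFusion is decided by the prefixes alone, while two long URLs that share their first four bytes fall through to the buffer.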

However, functions that operate on strings must be specialized to take advantage of the inlined prefix. In addition to low-level comparison kernels, we implemented a wide range of other StringViewArray operations that cover the functions and operations seen in ClickBench queries. Supporting StringViewArray in all string operations takes quite a bit of effort, and thankfully the Arrow and DataFusion communities are already hard at work doing so (see https://github.com/apache/datafusion/issues/11752 if you want to help out).

Faster take and filter

After a filter operation such as WHERE url <> '' to avoid processing empty urls, DataFusion will often coalesce results to form a new array with only the passing elements.

This coalescing ensures the batches are sufficiently sized to benefit from vectorized processing in subsequent steps.

The coalescing operation is implemented using the take and filter kernels in arrow-rs. For StringArray, these kernels require copying the string contents to a new buffer without “holes” in between. This copy can be expensive, especially when the new array is large.

However, take and filter for StringViewArray can avoid the copy by reusing buffers from the old array. The kernels only need to create a new list of views that point at the same strings within the old buffers.

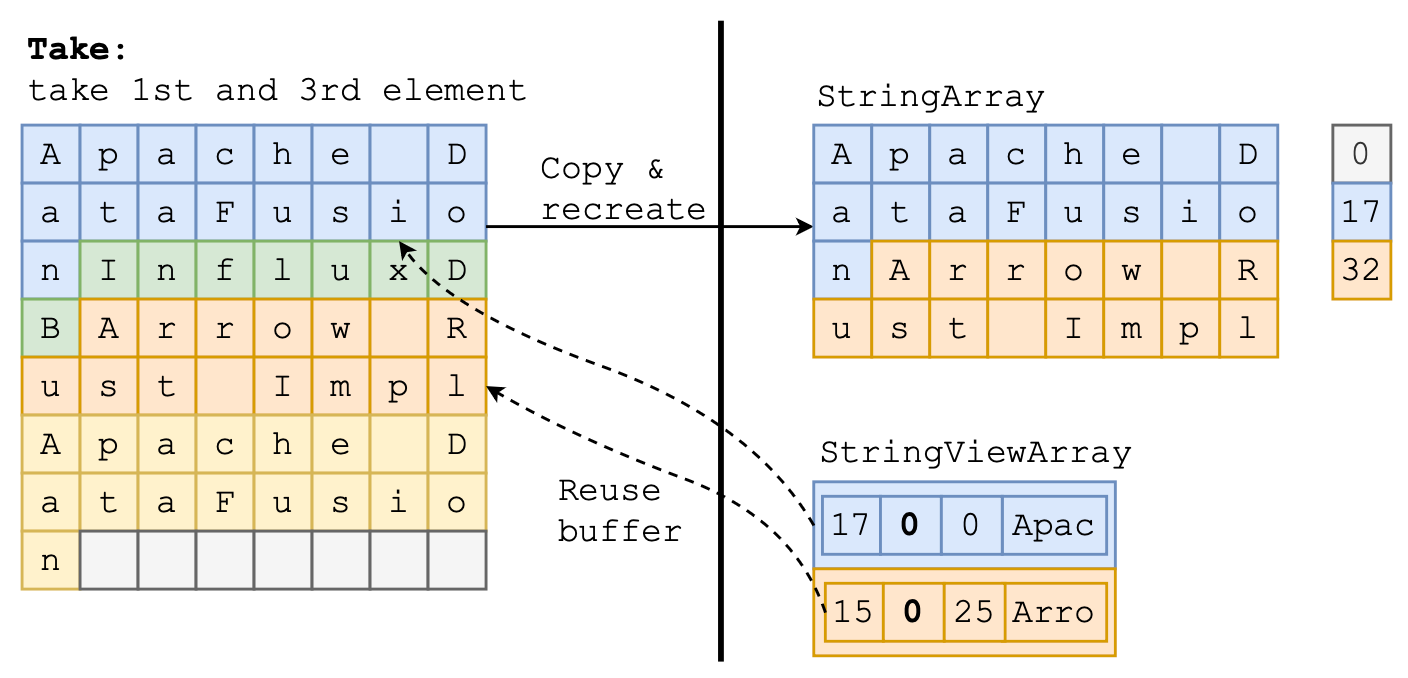

Figure 1 illustrates the difference between the output of both string representations. StringArray creates two new strings at offsets 0-17 and 17-32, while StringViewArray simply points to the original buffer at offsets 0 and 25.

Figure 1: Zero-copy take/filter for StringViewArray
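The idea behind the zero-copy filter can be sketched in a few lines of Rust. This is an illustrative model, not the arrow-rs kernel: `ViewArray`, `View`, and their methods are hypothetical names, a single shared buffer stands in for the list of buffers, and the inlining of short strings is ignored.

```rust
use std::sync::Arc;

/// Hypothetical view: a byte range inside a shared buffer.
#[derive(Clone, Copy, Debug, PartialEq)]
struct View {
    offset: usize,
    len: usize,
}

/// Hypothetical view array with one reference-counted data buffer.
struct ViewArray {
    buffer: Arc<Vec<u8>>,
    views: Vec<View>,
}

impl ViewArray {
    fn from_strs(values: &[&str]) -> Self {
        let mut buffer = Vec::new();
        let mut views = Vec::with_capacity(values.len());
        for v in values {
            views.push(View { offset: buffer.len(), len: v.len() });
            buffer.extend_from_slice(v.as_bytes());
        }
        ViewArray { buffer: Arc::new(buffer), views }
    }

    fn value(&self, i: usize) -> &str {
        let v = self.views[i];
        std::str::from_utf8(&self.buffer[v.offset..v.offset + v.len]).unwrap()
    }

    /// Keeps the selected rows by copying views only; string bytes are shared.
    fn filter(&self, keep: &[bool]) -> ViewArray {
        let views = self
            .views
            .iter()
            .zip(keep)
            .filter(|(_, k)| **k)
            .map(|(v, _)| *v)
            .collect();
        ViewArray { buffer: Arc::clone(&self.buffer), views }
    }
}
```

The filtered array holds a new, shorter list of views, but `Arc::clone` only bumps a reference count, so both arrays point at the same bytes. This is also why the original buffer stays alive for as long as any filtered result does.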

When to GC?

Zero-copy take/filter is great for generating large arrays quickly, but it is suboptimal for highly selective filters, where most of the strings are filtered out. When the cardinality drops, StringViewArray buffers become sparse: only a small subset of the bytes in the buffer’s memory are referred to by any view. This leads to excessive memory usage, especially in a filter-then-coalesce scenario. For example, a StringViewArray with 10M strings may only refer to 1M strings after some filter operations; however, due to zero-copy take/filter, the (reused) buffers holding all 10M strings cannot be released or reused.

To release unused memory, we implemented a garbage collection (GC) routine to consolidate the data into a new buffer to release the old sparse buffer(s). As the GC operation copies strings, similarly to StringArray, we must be careful about when to call it. If we call GC too early, we cause unnecessary copying, losing much of the benefit of StringViewArray. If we call GC too late, we hold large buffers for too long, increasing memory use and decreasing cache efficiency. The Polars blog on StringView also refers to the challenge presented by garbage collection timing.

arrow-rs implements the GC process, but it is up to users to decide when to call it. We leveraged the semantics of the query engine and observed that the CoalesceBatchesExec operator, which merges smaller batches into a larger batch, is often used after the record cardinality is expected to shrink, which aligns perfectly with the scenario of GC in StringViewArray.

We, therefore, implemented the GC procedure inside CoalesceBatchesExec[^5] with a heuristic that estimates when the buffers are too sparse.
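A sparsity heuristic of this kind might look like the sketch below. It is an assumption-laden illustration, not the DataFusion code: the `ViewArray` type, the `min_utilization` parameter, and the `maybe_gc` helper are hypothetical, and the actual heuristic and threshold used in CoalesceBatchesExec may differ.

```rust
/// Hypothetical view array: `(offset, len)` views into one data buffer.
struct ViewArray {
    buffer: Vec<u8>,
    views: Vec<(usize, usize)>,
}

impl ViewArray {
    /// Bytes reachable through the views (over-counts if views overlap).
    fn referenced_bytes(&self) -> usize {
        self.views.iter().map(|&(_, len)| len).sum()
    }

    /// True when less than `min_utilization` of the buffer is still in use.
    fn is_sparse(&self, min_utilization: f64) -> bool {
        !self.buffer.is_empty()
            && (self.referenced_bytes() as f64) < min_utilization * self.buffer.len() as f64
    }

    /// Copies only the referenced strings into a fresh, dense buffer.
    fn gc(&self) -> ViewArray {
        let mut buffer = Vec::with_capacity(self.referenced_bytes());
        let views: Vec<(usize, usize)> = self
            .views
            .iter()
            .map(|&(offset, len)| {
                let new_offset = buffer.len();
                buffer.extend_from_slice(&self.buffer[offset..offset + len]);
                (new_offset, len)
            })
            .collect();
        ViewArray { buffer, views }
    }
}

/// Compacts only when the copy is likely to pay for itself.
fn maybe_gc(arr: ViewArray, min_utilization: f64) -> ViewArray {
    if arr.is_sparse(min_utilization) {
        arr.gc()
    } else {
        arr
    }
}
```

The threshold encodes the trade-off described above: a higher `min_utilization` compacts more eagerly and copies more, while a lower one keeps sparse buffers alive for longer.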

The art of function inlining: not too much, not too little

Like string inlining, function inlining is the process of embedding a short function into the caller to avoid the overhead of function calls (caller/callee save).

Usually, the Rust compiler does a good job of deciding when to inline. However, it is possible to override its default using the #[inline(always)] directive.

In performance-critical code, inlined code allows us to organize large functions into smaller ones without paying the runtime cost of function invocation.

However, function inlining is not always better, as it leads to larger function bodies that are harder for LLVM to optimize (for example, suboptimal register spilling) and risk overflowing the CPU’s instruction cache. We observed several performance regressions where function inlining caused slower performance when implementing the StringViewArray comparison kernels. Careful inspection and tuning of the code was required to aid the compiler in generating efficient code. More details can be found in this PR: https://github.com/apache/arrow-rs/pull/5900.
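As a small illustration of the attributes involved (not the code from the PR above), the sketch below forces the short prefix check to be inlined into the caller and keeps the full comparison out of line. The function names and the 4-byte prefix length are assumptions chosen for the example.

```rust
use std::cmp::Ordering;

/// Hot path: a few instructions per row, so ask the compiler to inline it.
#[inline(always)]
fn compare_prefix(a: &[u8], b: &[u8]) -> Ordering {
    let n = 4.min(a.len()).min(b.len());
    a[..n].cmp(&b[..n])
}

/// Slow path: kept out of line so that callers stay small.
#[inline(never)]
fn compare_full(a: &[u8], b: &[u8]) -> Ordering {
    a.cmp(b)
}

/// Decides from the prefix when possible and falls back to the full compare.
fn compare(a: &[u8], b: &[u8]) -> Ordering {
    match compare_prefix(a, b) {
        Ordering::Equal => compare_full(a, b),
        decided => decided,
    }
}
```

Whether either attribute helps depends on the surrounding code, so each change should be checked with a benchmark rather than assumed to be an improvement.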

Buffer size tuning

StringViewArray permits multiple buffers, which enables a flexible buffer layout and potentially reduces the need to copy data. However, a large number of buffers slows down the performance of other operations.

For example, get_array_memory_size needs to sum the memory size of each buffer, which takes a long time with thousands of small buffers.

In certain cases, we found that multiple calls to concat_batches lead to arrays with millions of buffers, which was prohibitively expensive.

For example, consider a StringViewArray with the previous default buffer size of 8 KB. With this configuration, holding 4GB of string data requires almost half a million buffers! Larger buffer sizes are needed for larger arrays, but we cannot arbitrarily increase the default buffer size, as small arrays would consume too much memory (most arrays require at least one buffer). Buffer sizing is especially problematic in query processing, as we often need to construct small batches of string arrays, and the sizes are unknown at planning time.

To balance the buffer size trade-off, we again leverage the query processing (DataFusion) semantics to decide when to use larger buffers. While coalescing batches, we combine multiple small string arrays and set a smaller buffer size to keep the total memory consumption low. In string aggregation, we aggregate over an entire DataFusion partition, which can generate a large number of strings, so we set a larger buffer size (2MB).

To assist situations where the semantics are unknown, we also implemented a classic dynamic exponential buffer size growth strategy, which starts with a small buffer size (8KB) and doubles the size of each new buffer up to 2MB. We implemented this strategy in arrow-rs and enabled it by default so that other users of StringViewArray can also benefit from this optimization. See this issue for more details: https://github.com/apache/arrow-rs/issues/6094.
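The growth strategy can be sketched as follows. The 8KB starting size, the doubling, and the 2MB cap come from the description above; the `BlockSizer` type and the `buffers_needed` helper are hypothetical names used only for this illustration, and the sketch assumes every buffer is filled completely.

```rust
const INITIAL_BUFFER_SIZE: u64 = 8 * 1024; // 8KB
const MAX_BUFFER_SIZE: u64 = 2 * 1024 * 1024; // 2MB

/// Hands out buffer sizes that double until they reach the cap.
struct BlockSizer {
    next: u64,
}

impl BlockSizer {
    fn new() -> Self {
        BlockSizer { next: INITIAL_BUFFER_SIZE }
    }

    /// Size to use for the next buffer.
    fn next_size(&mut self) -> u64 {
        let size = self.next;
        self.next = (self.next * 2).min(MAX_BUFFER_SIZE);
        size
    }
}

/// Number of buffers needed to hold `total_bytes` under this strategy.
fn buffers_needed(total_bytes: u64) -> u64 {
    let mut sizer = BlockSizer::new();
    let (mut covered, mut count) = (0u64, 0u64);
    while covered < total_bytes {
        covered += sizer.next_size();
        count += 1;
    }
    count
}
```

Under these assumptions, a small array still fits in a single 8KB buffer, while 4GB of string data needs roughly two thousand buffers instead of about half a million fixed 8KB buffers.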

End-to-end query performance

We have made significant progress in optimizing StringViewArray filtering operations. Now, let’s test it in the real world to see how it works!

Let’s consider ClickBench query 22, which selects multiple string fields (URL, Title, and SearchPhrase) and applies several filters.

We ran the benchmark using the following command in the DataFusion repo. Again, the --string-view option means we use StringViewArray instead of StringArray.

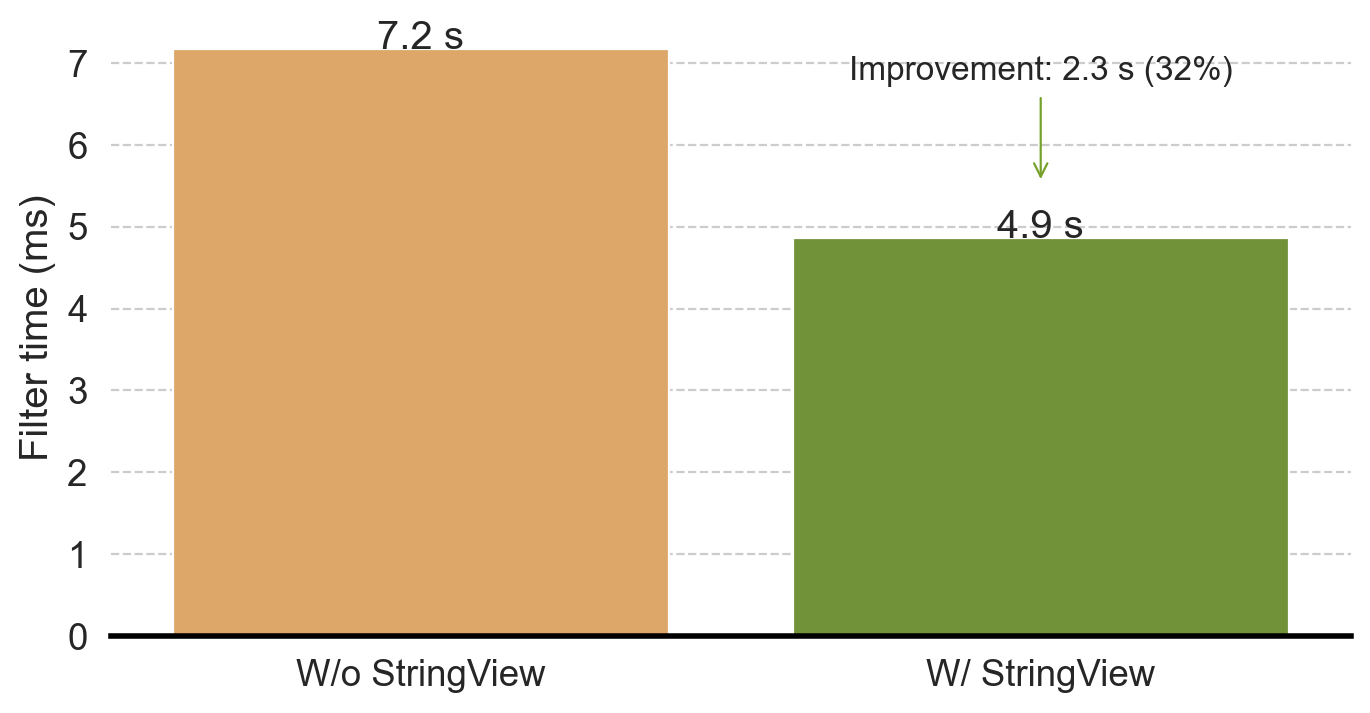

To eliminate the impact of the faster Parquet reading using StringViewArray (see the first part of this blog), Figure 2 plots only the time spent in FilterExec. Without StringViewArray, the filter takes 7.17s; with StringViewArray, the filter only takes 4.86s, a 32% reduction in time. Moreover, we see a 17% improvement in end-to-end query performance.

Figure 2: StringViewArray reduces the filter time by 32% on ClickBench query 22.

Faster String Aggregation

So far, we have discussed how to exploit two StringViewArray features: reduced copy and faster filtering. This section focuses on reusing string bytes to repeat string values.

As described in part one of this blog, if two strings have identical values, StringViewArray can use two different views pointing at the same buffer range, thus avoiding repeating the string bytes in the buffer. This makes StringViewArray similar to an Arrow DictionaryArray that stores Strings—both array types work well for strings with only a few distinct values.

Deduplicating string values can significantly reduce memory consumption in StringViewArray. However, this process is expensive and involves hashing every string and maintaining a hash table, and so it cannot be done by default when creating a StringViewArray. We introduced an opt-in string deduplication mode in arrow-rs for advanced users who know their data has a small number of distinct values, and where the benefits of reduced memory consumption outweigh the additional overhead of array construction.
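The cost and the benefit of deduplication can both be seen in a small sketch. This is not the arrow-rs builder: `DedupBuilder` is a hypothetical type, views are plain `(offset, len)` pairs into one buffer, and the inlining of short strings is ignored.

```rust
use std::collections::HashMap;

/// Hypothetical builder that can reuse the bytes of repeated strings.
struct DedupBuilder {
    buffer: Vec<u8>,
    views: Vec<(usize, usize)>,
    seen: HashMap<String, (usize, usize)>,
    dedup: bool,
}

impl DedupBuilder {
    fn new(dedup: bool) -> Self {
        DedupBuilder { buffer: Vec::new(), views: Vec::new(), seen: HashMap::new(), dedup }
    }

    fn append(&mut self, s: &str) {
        if self.dedup {
            // Extra cost: every appended string is hashed and looked up.
            if let Some(&view) = self.seen.get(s) {
                self.views.push(view); // Benefit: no new bytes are written.
                return;
            }
        }
        let view = (self.buffer.len(), s.len());
        self.buffer.extend_from_slice(s.as_bytes());
        if self.dedup {
            self.seen.insert(s.to_string(), view);
        }
        self.views.push(view);
    }
}
```

Appending the same phone model name a thousand times stores its bytes once with deduplication enabled and a thousand times without it, at the price of one hash lookup per appended value.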

Once again, we leverage DataFusion query semantics to identify StringViewArray with duplicate values, such as aggregation queries with multiple group keys. For example, some ClickBench queries group by two columns:

- UserID (an integer with close to 1M distinct values)
- MobilePhoneModel (a string with fewer than a hundred distinct values)

In this case, the output row count is count(distinct UserID) * count(distinct MobilePhoneModel), which is 100M. Each string value of MobilePhoneModel is repeated 1M times. With StringViewArray, we can save space by pointing the repeating values to the same underlying buffer.

Faster string aggregation with StringView is part of a larger project to improve DataFusion aggregation performance. We have a proof of concept implementation with StringView that can improve the multi-column string aggregation by 20%. We would love your help to get it production ready!

StringView Pitfalls

Most existing blog posts (including this one) focus on the benefits of using StringViewArray over other string representations such as StringArray. As we have discussed, even though it requires a significant engineering investment to realize, StringViewArray is a major improvement over StringArray in many cases.

However, there are several cases where StringViewArray is slower than StringArray. For completeness, we have listed those instances here:

- Tiny strings (when strings are shorter than 8 bytes): every element of the StringViewArray consumes at least 16 bytes of memory, the size of the view struct. For an array of tiny strings, StringViewArray consumes more memory than StringArray and thus can cause slower performance due to additional memory pressure on the CPU cache.

- Many repeated short strings: Similar to the first point, StringViewArray can be slower and require more memory than a DictionaryArray because 1) it can only reuse the bytes in the buffer when the strings are longer than 12 bytes and 2) 32-bit offsets are always used, even when a smaller size (8 bit or 16 bit) could represent all the distinct values.

- Filtering: As we mentioned above, StringViewArrays often consume more memory than the corresponding StringArray, and memory bloat quickly dominates the performance without GC. However, invoking GC also reduces the benefits of less copying, so it must be carefully tuned.
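The memory side of the first two pitfalls can be made concrete with a rough estimate. The helpers below are hypothetical and simplified: they assume 32-bit offsets for StringArray, ignore validity bitmaps and allocation padding, and assume that no bytes are shared between views.

```rust
/// StringArray: one 4-byte offset per value (plus one) and the string bytes.
fn string_array_bytes(lens: &[usize]) -> usize {
    4 * (lens.len() + 1) + lens.iter().sum::<usize>()
}

/// StringViewArray: 16 bytes per view; only strings longer than 12 bytes
/// also occupy space in a data buffer.
fn string_view_array_bytes(lens: &[usize]) -> usize {
    16 * lens.len() + lens.iter().filter(|&&l| l > 12).sum::<usize>()
}

/// DictionaryArray: one key per row and a StringArray of distinct values.
fn dictionary_array_bytes(rows: usize, distinct_lens: &[usize], key_bytes: usize) -> usize {
    rows * key_bytes + string_array_bytes(distinct_lens)
}
```

For 1,000 four-byte strings, this estimate gives about 8KB for StringArray and 16KB for StringViewArray. If those rows contain only ten distinct values, a dictionary with 8-bit keys needs little more than 1KB.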

Conclusion and Takeaways

In these two blog posts, we discussed what it takes to implement StringViewArray in arrow-rs and then integrate it into DataFusion. Our evaluations on ClickBench queries show that StringView can improve the performance of string-intensive workloads by up to 2x.

Given that DataFusion already performs very well on ClickBench, the level of end-to-end performance improvement using StringViewArray shows the power of this technique and, of course, is a win for DataFusion and the systems that build upon it.

StringView is a big project that has received tremendous community support. Specifically, we would like to thank @tustvold, @ariesdevil, @RinChanNOWWW, @ClSlaid, @2010YOUY01, @chloro-pn, @a10y, @Kev1n8, @Weijun-H, @PsiACE, @tshauck, and @xinlifoobar for their valuable contributions!

As the introduction states, “German Style Strings” is a relatively straightforward research idea that avoids some string copies and accelerates comparisons. However, applying this (great) idea in practice requires a significant investment in careful software engineering. Again, we encourage the research community to continue to help apply research ideas to industrial systems, such as DataFusion, as doing so provides valuable perspectives when evaluating future research questions for the greatest potential impact.

Footnotes

[^5]: There are additional optimizations possible in this operation that the community is working on, such as https://github.com/apache/datafusion/issues/7957.
